Bullet-time photography using Raspberry Pi cameras?

We started to work on a PiCam version of our multi-camera software a few months ago, but that had nothing to do with our usual bullet-time work. It was mostly about photogrammetry, which should look like our DSLR system, with much smaller cameras. And that part is ready.

But since we had the equipment and the software, I got curious to see how far we could push it with bullet-time and what kind of image quality I could get out of these tiny cameras. My main interest here is their size: they can be placed super close to each other, giving me my best density ever on a multi-camera structure.

Now just as with our Android or DSLR edition, this works with a dashboard to change the camera settings and output settings. We also have our usual instant playback player, our gallery, and tons of modules.

On the first iteration of the kit, I couldn't get a good calibration because the PiCam lenses are mounted on a thermal paste and can't be placed with precision. This is what I got from this first kit. It is quite shaky. The problem is that these are very wide lenses, and with the distortion, I really need all of the cameras to look at the same center spot. But as I got more hooked on the end results I was getting, we tried to see if we could get rid of that paste and stick the lenses directly to the small boards. It's not perfect, but it's getting close. This is my current kit!

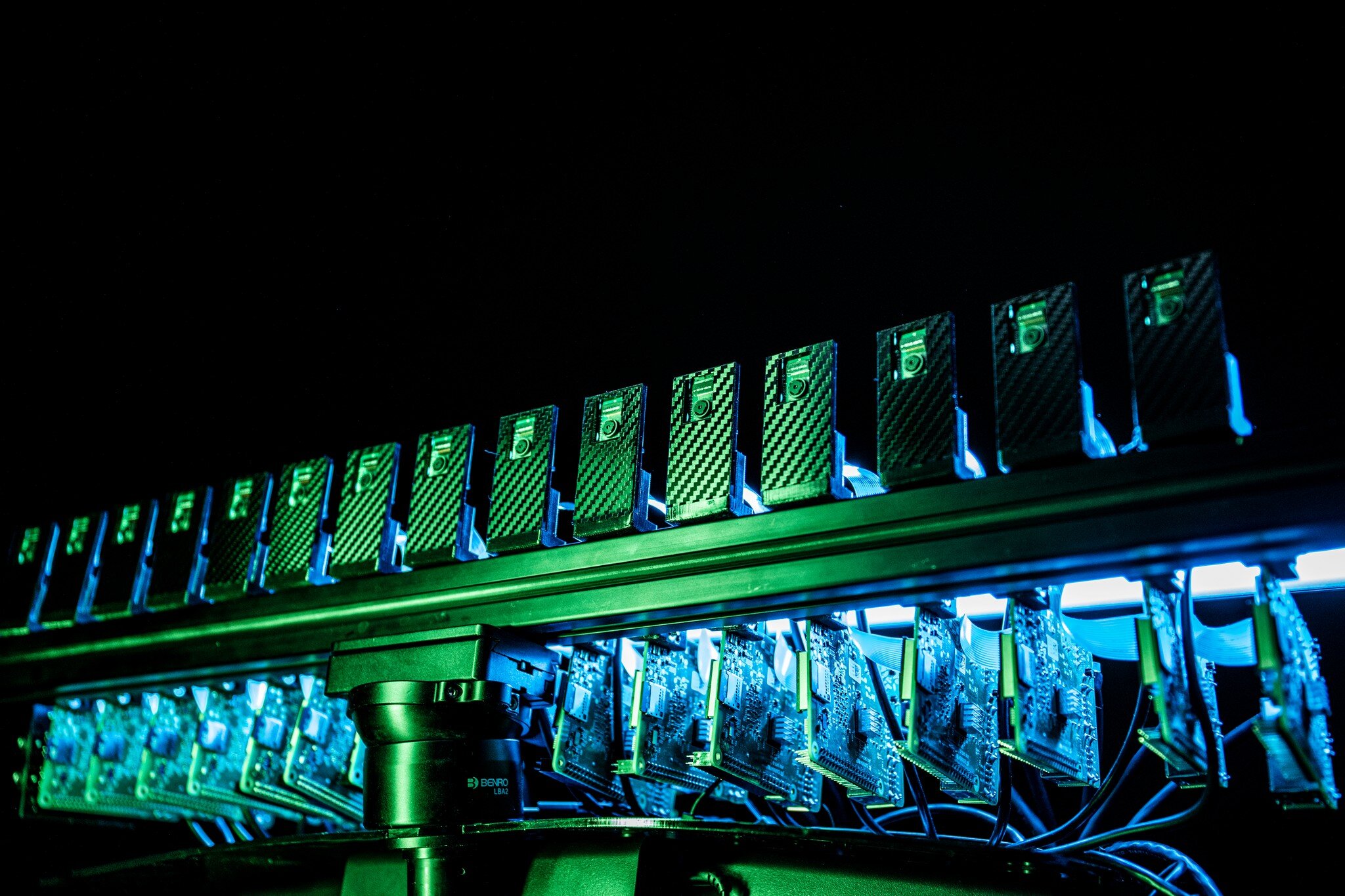

For all of my tests using continuous light, I'm using 15 PiCams on 15 Raspberry Pi 3B+ boards with no custom electronic components. Each Pi sends its data through Ethernet cables to the switches and on to the laptop. My trigger is a simple Bluetooth PowerPoint presenter. But for my shots using an external strobe, we had to design a little PCB to send the signal to my speedlite. The board sits on the last Pi and is linked to the flash via an audio cable and a hot shoe adapter. Any kind of flash can work for this.

The PiCam boards are mounted on laser-cut acrylic plates, which connect to an extruded aluminum bar via these 3D-printed adapters. Each

I did this project as a cool experiment, and it is never going to replace my DSLRs, but it opens the door to different ways of using this technology.

*** UPDATE October 4, 2020

This project has been published on hackaday.com and a few of the comments were quite interesting to read. I was not expecting that kind of crowd to step in and I’m quite happy to start this discussion with folks outside my usual circle. Here’s a detailed answer to this comment:

Scott says:

I don’t think the Pi’s are recoding video – they are each snapping one still photo when Eric presses the Bluetooth clicker in his hand. The still photos are stitched together to create the “bullet time” view. It seems like the custom software that is controlling all of the Pi’s is sending a broadcast packet when Eric triggers the clicker. If you watch the video closely, the Ethernet activity LED flashes once on every Pi at the same instant when the photo is taken. Pretty ingenious solution. :-)

Scott, you are right, we’re snapping one still photo from each PiCam at the same time. On our first iteration, we were grabbing a frame from a movie, but this technique doesn’t work very well with the Pi Camera HQ, as we can’t cope with its 12.3 MP frames continuously in video mode. Going to “still” frames was the way to go to have a system that works on both the PiCam v2 and the Pi Camera HQ.

Now, what about the accuracy of the triggering? We know that we can send a signal in sync to all Raspberry Pis with under one millisecond of precision, and this example shows pretty well how great it can be when working with proper equipment. This was made using 154 Canon SL1 cameras under constant light, with 4 cameras per Pi (39 Raspberry Pi units). But this is something that can be achieved only with DSLR cameras or some recent mirrorless cameras (it works well with the EOS-R but not with the M6 MkII). For everything else (Raspberry Pi, Android smartphones, GoPro, etc.), we’re stuck with shutter issues where the frame rate is not synced (genlocked) across all cameras. That usually means an imprecision of about 1/60s, which is far from being enough to freeze a subject (we need 1ms precision!).
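To put those two numbers side by side, here is a rough back-of-the-envelope sketch of how far a subject moves between the earliest and the latest camera. The subject speed of 2 m/s is purely an assumption for illustration (roughly a moving hand or a small jump), and the function name is hypothetical.

```python
def displacement_mm(speed_m_per_s: float, timing_error_s: float) -> float:
    """Distance (in mm) a subject travels during the timing error between cameras."""
    return speed_m_per_s * timing_error_s * 1000.0


# Assumption for illustration only: the subject moves at about 2 m/s.
ASSUMED_SPEED_M_PER_S = 2.0

# Cameras without genlock: about 1/60 s of spread between units.
unsynced_mm = displacement_mm(ASSUMED_SPEED_M_PER_S, 1 / 60)

# Cameras triggered with 1 ms precision.
synced_mm = displacement_mm(ASSUMED_SPEED_M_PER_S, 0.001)

print(f"1/60 s spread: subject moves about {unsynced_mm:.1f} mm between cameras")
print(f"1 ms spread:   subject moves about {synced_mm:.1f} mm between cameras")
```

At that assumed speed, the subject shifts by a few centimeters from one camera to the next with a 1/60s spread, versus a couple of millimeters at 1ms, which is why the unsynced sequence looks like it jitters instead of being frozen.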

So how have I been able to freeze myself on these shots? I’m simply using that old trick of popping my strobe within the exposure at a “safe” time. For example, if my shutter speed is 1/2s, then I would flash 1/4s after the initial trigger signal, giving enough margin for all of the units to be exposing when the light goes on. It was quite easy to perform as I have that board, which makes everything automatic. But last year, I had a hard time doing the same thing with Android phones, where I needed to manually flash within my one-second exposure (more examples in the right sidebar on SlimRig.com).
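The timing behind that trick can be sketched in a few lines. This is only an illustration under stated assumptions, not the code running on the rig: it assumes each camera opens its shutter somewhere between 0 and `start_jitter` seconds after the trigger, that all cameras use the same exposure, and that the flash itself is instantaneous. The function names are hypothetical.

```python
from fractions import Fraction


def safe_flash_window(exposure, start_jitter):
    """Return (earliest, latest) flash times in seconds after the trigger.

    Assumed model: every camera starts exposing between 0 and start_jitter
    after the trigger and stays open for `exposure` seconds. All cameras are
    guaranteed to be exposing from the latest possible start (start_jitter)
    until the earliest possible end (exposure).
    """
    if start_jitter >= exposure:
        raise ValueError("exposure is too short to cover the start jitter")
    return start_jitter, exposure


def midpoint_flash_delay(exposure):
    """Flash halfway through the nominal exposure, as in the 1/2 s example."""
    return exposure / 2


exposure = Fraction(1, 2)   # 1/2 s shutter speed
jitter = Fraction(1, 60)    # about 1/60 s of spread between cameras

earliest, latest = safe_flash_window(exposure, jitter)
delay = midpoint_flash_delay(exposure)

print(f"safe window: {earliest} s to {latest} s after the trigger")
print(f"flash delay: {delay} s, inside window: {earliest <= delay <= latest}")
```

With a 1/2s exposure and about 1/60s of spread, firing at 1/4s leaves a wide margin on both sides, so the exact delay does not need to be precise.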

Project image gallery